The popularity of Generative Artificial Intelligence (GenAI) has been challenging the way we teach and prepare our students for the job market, in both positive and negative ways. When talking to students, I realized that, while they use GenAI extensively, they are often unaware of the consequences of that use. This was especially true of the environmental impact: even highly environmentally minded students were not informed about the environmental consequences of asking ChatGPT a question.

So I set out to answer two questions. First, how widespread is the use of GenAI in the workplace? Second, what are the environmental impacts of GenAI, and specifically, what is the carbon footprint of GenAI use?

More people and businesses are incorporating AI into their activities. A survey by PwC set out to understand businesses’ perceptions and use of GenAI. CEOs from different industries were asked various questions about their perceptions of GenAI. They recognize GenAI as both a threat and an opportunity, and 70% say they see GenAI impacting their business model in the next three years. Moreover, the survey indicated that the tech industry is likely to see the highest gains from GenAI use: a potential 19 percentage point uplift in operating margin on average, due for example to cost savings in software development, which accounts for up to 20% of their costs.

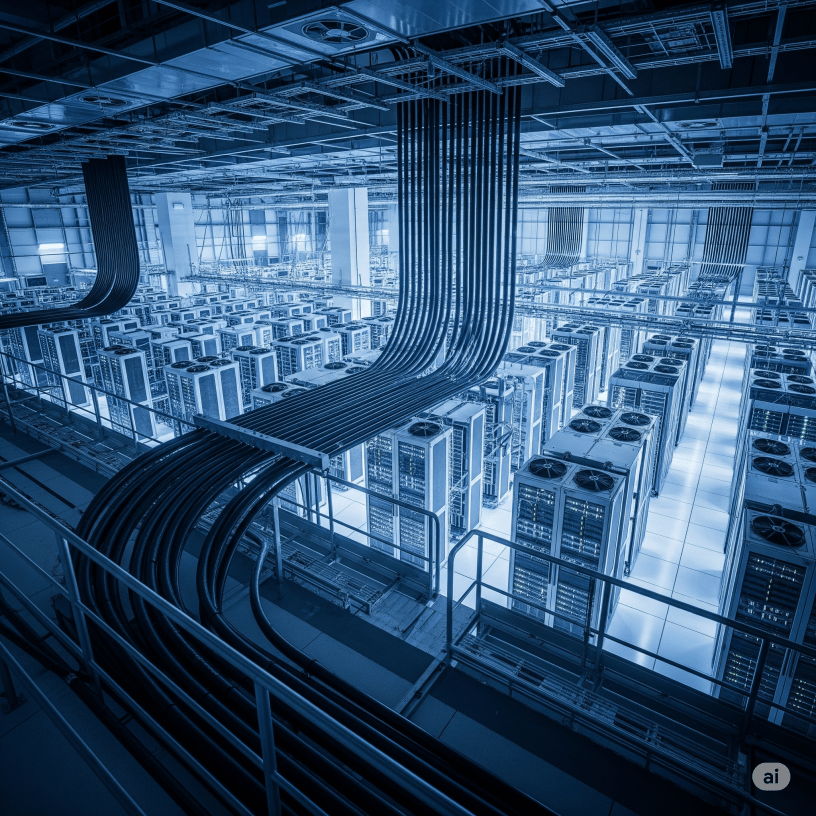

At the same time, GenAI has important environmental impacts. GenAI operation relies on data centers, which are energy-intensive infrastructures that generate, manage, and store information. Due to a variety of factors, including GenAI, the demand for data centers has grown substantially in recent years (Cowls et al., 2021). The larger the number and capacity of data centers, the larger the demand for energy and, consequently, the greater the greenhouse gas (GHG) emissions, resource use and waste (d’Aramon et al., 2024).

The earliest estimates of the environmental footprint of GenAI were calculated per query, i.e. per request for content. For energy use, Landwehr (2023) estimated that a ChatGPT query uses 0.00119 to 0.01292 kWh. Brown et al. (2020) estimated that GPT-3 uses 0.4 kWh of electricity to generate 100 pages of content, roughly 0.004 kWh per page.
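To make these magnitudes easier to compare, here is a minimal Python sketch that restates the figures quoted above in watt-hours and reproduces the per-page number. The function and constant names are my own, chosen for illustration only.

```python
# Figures quoted in the text above.
LANDWEHR_KWH_PER_QUERY = (0.00119, 0.01292)  # low and high estimate (Landwehr, 2023)
BROWN_KWH_PER_100_PAGES = 0.4                # Brown et al. (2020)


def kwh_to_wh(kwh: float) -> float:
    """Convert kilowatt-hours to watt-hours."""
    return kwh * 1000.0


def kwh_per_page(total_kwh: float, pages: int) -> float:
    """Average energy per page, given the total energy for a number of pages."""
    return total_kwh / pages


low_wh, high_wh = (kwh_to_wh(x) for x in LANDWEHR_KWH_PER_QUERY)
print(f"Per query: {low_wh:.2f} to {high_wh:.2f} Wh")
print(f"Per page: {kwh_per_page(BROWN_KWH_PER_100_PAGES, 100):.3f} kWh")
```

In other words, the per-query range spans roughly one order of magnitude, from a little over 1 Wh to almost 13 Wh.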

When it comes to the carbon footprint, the estimates depend on the location of data centers, the number of words assumed per query, the GenAI model, etc. (d’Aramon et al., 2024). The carbon footprint can be estimated either for the training of GenAI or for its inference (use). For training, Cowls et al. (2021) is one of the earlier papers that estimates the carbon footprint of training OpenAI's GPT-3, and concludes that “one training run would emit as much as 49 cars”. For inference, earlier estimates for ChatGPT include 27.4 gCO2e per query in Denmark (Landwehr, 2023), 0.382 gCO2e per query in the US (Blog ref here), and 1.276 gCO2e per query hosted in the Western USA (Blog ref here). In January 2023, “ChatGPT’s estimated consumption correspond to the monthly electricity consumption of between 8,762 and 175,234 Danes.” (Blog ref here).
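One reason location matters is that operational emissions are commonly approximated as energy use multiplied by the carbon intensity of the local electricity grid. The sketch below illustrates that relationship with the per-query energy range from Landwehr (2023). The two grid intensities are hypothetical placeholder values that I picked for illustration; they do not come from any of the cited sources and are not meant to reproduce the published per-query estimates.

```python
# Energy range per ChatGPT query quoted in the text (Landwehr, 2023), in kWh.
ENERGY_KWH_PER_QUERY = (0.00119, 0.01292)

# HYPOTHETICAL grid carbon intensities in gCO2e per kWh. These are placeholder
# values chosen for illustration, not figures from the cited sources.
ASSUMED_GRID_INTENSITY = {
    "low-carbon grid (assumed)": 50.0,
    "high-carbon grid (assumed)": 500.0,
}


def emissions_g(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Operational emissions in gCO2e: energy used times grid carbon intensity."""
    return energy_kwh * intensity_g_per_kwh


for grid, intensity in ASSUMED_GRID_INTENSITY.items():
    low, high = (emissions_g(e, intensity) for e in ENERGY_KWH_PER_QUERY)
    print(f"{grid}: {low:.3f} to {high:.3f} gCO2e per query")
```

Under these assumptions the same query differs by a factor of ten in emissions purely because of where the electricity comes from, before any differences in model or query length are considered.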

Environmental impacts of GenAI go beyond CO2 emissions: Li et al. (2023) estimate AI’s total water footprint, including both operational and embodied water. They use the GPT-3 model as an example and predict that “the global AI demand is projected to account for 4.2 – 6.6 billion cubic meters of water withdrawal in 2027”. They also conclude that training this model in the US can be responsible for the consumption of 700,000 liters of clean freshwater.

A couple of months ago, d’Orgeval et al. (2025) assessed the environmental footprint of GenAI by conducting a Life Cycle Assessment. They include a variety of impacts: carbon emissions (kg CO2-eq), finite mineral and metal depletion, fuel depletion, soil acidity, waste disposal (kg), water use (m3) and ozone depletion (kg CFC-11-eq). While they cover both inference and training of GenAI, I focus here on the inference results only. They evaluate the entire data center life cycle rather than only the servers, to capture a more complete picture of the environmental implications: from IT equipment to cooling, power infrastructure, manufacturing, operation and end-of-life.

They find that the environmental footprint differs depending on where in the world the servers are located and run (e.g. UK or France versus China). For example, if data centers were located in the Netherlands, the environmental impacts of one query would be 13.9 gCO2-eq, 4.9 liters of water and 0.004 kg of waste disposed, to name a few. Different estimates are available for different countries.
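Per-query numbers can feel abstract, so here is a small Python sketch that scales the Netherlands figures quoted above to a year of use. The usage level of 10 queries per day is a hypothetical assumption of mine, not a figure from d’Orgeval et al. (2025), and the class and variable names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PerQueryFootprint:
    """Environmental impacts of a single query."""
    co2_g: float     # grams of CO2-eq
    water_l: float   # liters of water
    waste_kg: float  # kilograms of waste disposed

    def scaled(self, n_queries: int) -> "PerQueryFootprint":
        """Total impacts of n_queries, assuming impacts add up linearly."""
        return PerQueryFootprint(
            co2_g=self.co2_g * n_queries,
            water_l=self.water_l * n_queries,
            waste_kg=self.waste_kg * n_queries,
        )


# Per-query values for data centers in the Netherlands, as quoted in the text.
NETHERLANDS = PerQueryFootprint(co2_g=13.9, water_l=4.9, waste_kg=0.004)

# HYPOTHETICAL usage level, chosen only for illustration.
ASSUMED_QUERIES_PER_DAY = 10
DAYS_PER_YEAR = 365

annual = NETHERLANDS.scaled(ASSUMED_QUERIES_PER_DAY * DAYS_PER_YEAR)
print(f"CO2-eq: {annual.co2_g / 1000:.1f} kg per year")
print(f"Water:  {annual.water_l:.0f} liters per year")
print(f"Waste:  {annual.waste_kg:.1f} kg per year")
```

Changing `ASSUMED_QUERIES_PER_DAY` to match your own habits gives a rough, linear estimate; it ignores any variation between queries.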

This is an evolving field of research, but it helps to convey the magnitude of the environmental impacts of GenAI use. Rather than recommending that students and the general public use or refrain from using GenAI, my take-away message is that one should be informed about the environmental impacts of using GenAI, and use these tools sensibly.

References:

Landwehr, T. (2023). Der Energiehunger der KIs. Süddeutsche Zeitung. Accessed on 21-06-2023. https://www.sueddeutsche.de/wissen/chat-gpt-energieverbrauch-ki-1.5780744.

d’Aramon, I., Ruf, B., & Detyniecki, M. (2024). Assessing carbon footprint estimations of ChatGPT. In Renewable Energy Resources and Conservation (pp. 127-133). Cham: Springer Nature Switzerland.

Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J. D., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., Agarwal, S., Herbert-Voss, A., Krueger, G., Henighan, T., Child, R., Ramesh, A., Ziegler, D., Wu, J., Winter, C., Hesse, C., Chen, M., Sigler, E., Litwin, M., Gray, S., Chess, B., Clark, J., Berner, C., McCandlish, S., Radford, A., Sutskever, I., & Amodei, D. (2020). Language models are few-shot learners. In H. Larochelle, M. Ranzato, R. Hadsell, M. F. Balcan, & H. Lin (Eds.), Advances in Neural Information Processing Systems (Vol. 33, pp. 1877–1901). Curran Associates, Inc.

Cowls, J., Tsamados, A., Taddeo, M., & Floridi, L. (2021). The AI gambit: leveraging artificial intelligence to combat climate change—opportunities, challenges, and recommendations. AI & Society, 38(1), 283-307. https://doi.org/10.1007/s00146-021-01294-x

Li, P., Yang, J., Islam, M. A., & Ren, S. (2023). Making AI less “thirsty”: Uncovering and addressing the secret water footprint of AI models. arXiv preprint arXiv:2304.03271.

d’Orgeval, A., Sheehan, S., Avenas, Q., Assoumou, E., & Sessa, V. (2025). Generative AI impact assessment through a life cycle analysis of multiple data center typologies. Available at SSRN 5234773.

Cover Image: Generated with Gemini AI by Brooks Kaiser using the prompt “illustrate a data center and power going in to it”. When asked “how much electricity did it take to generate that image?”, this was the reply:

It’s difficult to give an exact figure for how much electricity it took to generate that specific image, as it depends on many factors, including:

- The specific AI model used: Different image generation models have varying levels of complexity and efficiency.

- The hardware used: The type of GPU (Graphics Processing Unit) and other computing infrastructure significantly impacts energy consumption.

- The inference time: How long the model takes to process the request and generate the image.

- The number of images generated in one “session”: Sometimes models are optimized for batch generation, which can impact per-image energy use.

- Overhead from the data center: This includes energy for cooling, other auxiliary systems, and general data center operations.

However, based on research into AI image generation energy consumption:

- Studies suggest that creating a single AI image can consume anywhere from 0.01 to 0.29 kilowatt-hours (kWh) of energy.

- Some sources compare this to charging a smartphone, stating that a few AI-generated images can consume as much energy as fully charging a smartphone (which is around 0.012 kWh).

- More powerful models like Stable Diffusion XL might use between 0.06-0.29 kWh per 1,000 images, which translates to a very small amount per individual image (0.000086–0.00029 kWh). However, other estimates are higher.

It’s important to note that while the energy for a single image might seem small, the collective impact of millions of AI-generated images daily adds up significantly. The overall energy consumption of data centers, driven in part by generative AI, is a growing concern for environmental impact due to electricity demand and associated carbon emissions, as well as water consumption for cooling.

I decided not to try to refine the image.